That’s a wrap on the “Legend of Heroes: Trails in the Sky” trilogy! Only took me about 14 years to finish all three entries, but who’s counting?

“The 3rd” was an interesting end to the trilogy, because it doesn’t really wrap up the story of “FC” and “SC,” the first and second chapters, respectively. That story was already wrapped up in “SC.”

It does take place six months after the events of “SC,” and features all the characters from the first two games in familiar locations. “The 3rd” is almost like an additional story. It focuses on Father Kevin Graham of the Septian Church, who made his first appearance in “SC” as a supporting character. So it was a bit surprising that “The 3rd” focused entirely on him and his story.

That surprise actually made me put it down the first time I tried playing “The 3rd,” back in December 2022, not long after I had finished “SC.” I think I was also a little burned out on JRPGs, given I finished both “FC” and “SC” that year, back-to-back.

Anyway, I don’t know what I was expecting, but I didn’t expect Kevin Graham and his sidekick and foster sister, Sister Ries Argent, to be the main characters. I thought I’d get to see more of Estelle and Joshua, the main characters of the first two “Trails in the Sky” entries.

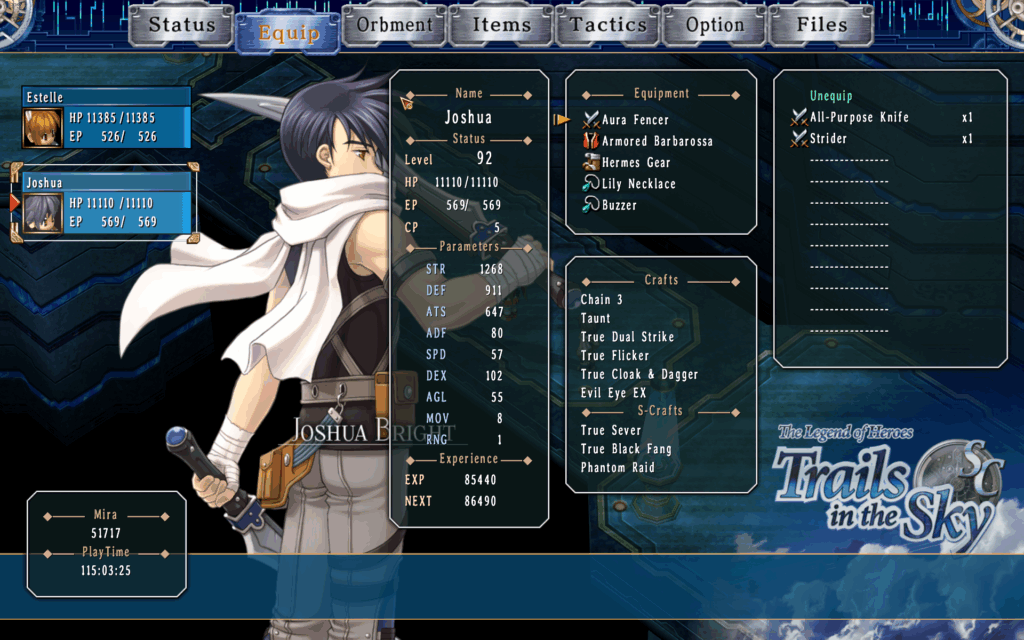

Which I did. Estelle and Joshua are playable characters in “The 3rd.” But they’re supporting characters only, in the same way Kevin was in “SC.”

I probably put in like 10 hours in that first attempt, but I wasn’t sure where the story was going. So I put it down until March of this year, when I restarted it. I decided to do a full playthrough of the “Trails” series, so I had to finish “The 3rd.”

And I’m glad I did. The story overall was interesting. Many of the optional sidequests provided additional history and context for the various party members. The one for Renne was particularly dark, but it explained why she is the way she is. Other sidequests gave more lore about the world, such as the history of orbal mechanics, or of the nations and organizations within the world of Zemuria. One of the big strengths of the “Trails” series is its lore and worldbuilding, so I was glad to see even more of it.

I’m never trying to do a full synopsis or review with these posts, so I’ll start wrapping it up. Here are my “stats” for “Legend of Heroes: Trails in the Sky, The 3rd:”

- US Release: 2017-05-03

- Purchased on Steam: 2022-07-01

- Played on: PC (Steam) & Steam Deck

- Installed: December 2022

- Start Date: Attempt 1 – Dec 2022 // Attempt 2 – March 2025

- Time in-game based on Steam: 148.5 hrs (across both attempts)

- Time in-game based on Save Date: 108 hrs

- Completed: 2025-05-04

This was a long playthrough. But as usual, I went down the completionist route. I don’t think I necessarily achieved a perfect 100% completion, but I did finish all the sidequests, plus all the Moon Doors, Star Doors, and Sun Doors, even those that could be played multiple times at different difficulties. I may have looked at guides for some of those.

And as I always do in JRPGs, I grinded. HARD. There were several nights where all I would do for 2-4 hours is run back and forth on a map, fighting mobs, for all the playable characters. That worked out, because the final boss sequence definitely required use of all 16 party members. For what it’s worth, my final party consisted of Kevin, Ries, Colonel Alan Richard, and Senior Bracer Scherazard Harvey.

With the “Trails in the Sky” trilogy completed, I’ve finished the “Liberl arc.” Liberl is the country where these three games take place. But now it’s time to move on to the “Crossbell arc,” with the first entry, “Legend of Heroes: Trails from Zero.” Hope to have a ‘Game Complete!’ post for it sometime this summer!